Featured Work

TravisBott

The Making Of An AI-Generated Travis Scott Song

Using the AI tools available in 2019, a talented developer and engineer named Drew Wiskus and I set out to build an AI that could make Travis Scott songs. I would be the creative guy with the music knowledge. I would plug whatever Drew generated into a DAW, and out would come magical AI music.

Drew spent a few weeks scouring the internet for data and basically taught the model to write lyrics like Travis Scott. Kind of. It turns out that, in 2019 at least, you had to do a lot of training before an AI would kick out anything remotely cogent in terms of language. This was before ChatGPT. It was like we were trying to build our own very specific version of ChatGPT that could rhyme in the style of Travis Scott.

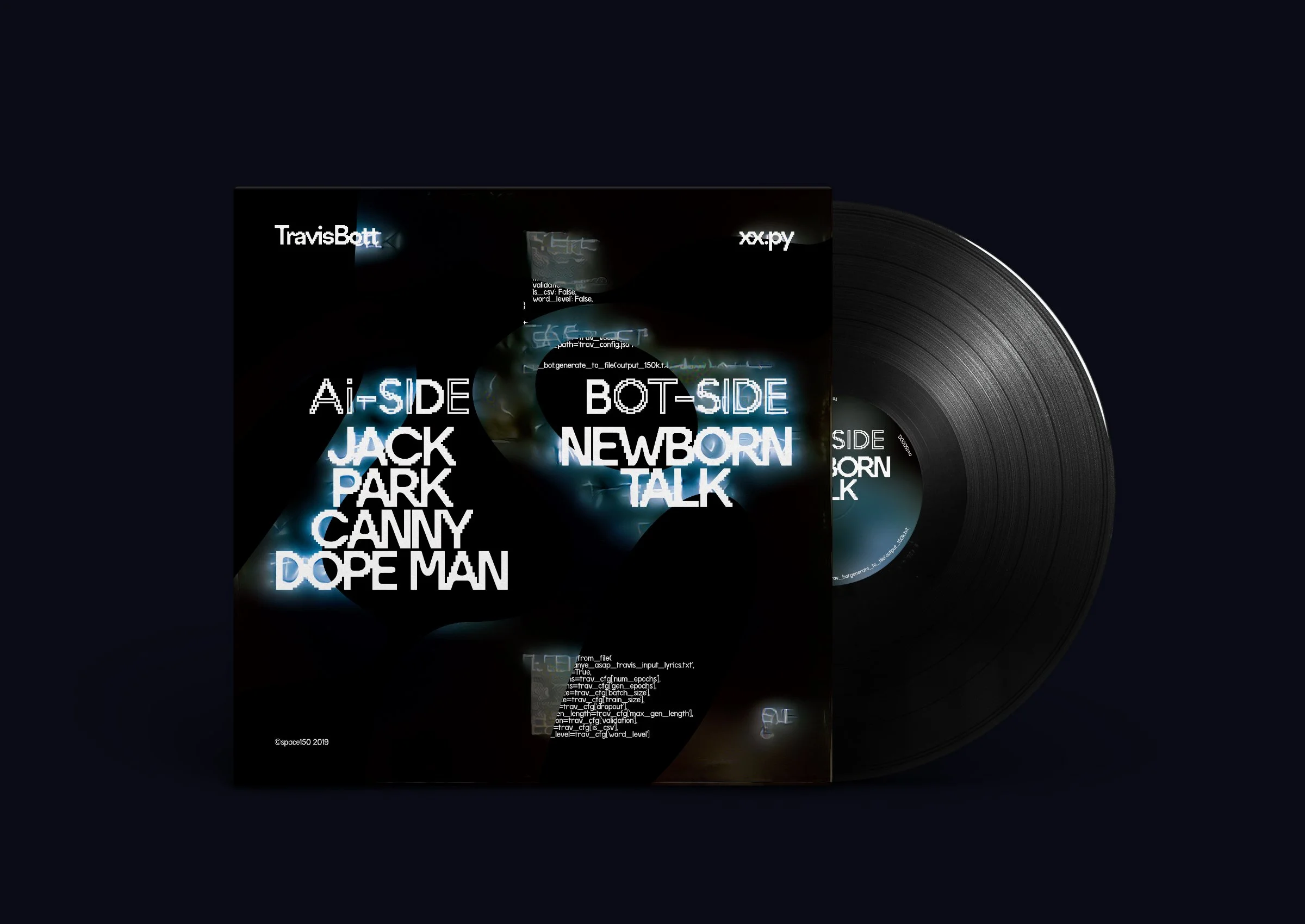

We fed a text-generating GAN the lyrics from every Travis Scott song ever recorded, every unfiltered line. The GAN spat back nonsense, and the “bot” (which we named “TravBot”) filtered out as much of that nonsense as it could. That gave us at least semi-coherent lyrics that resembled the rapper’s surreal visual couplets. What it came up with, after many trials, became “Jack Park Canny Dope Man.”

The album cover for the vinyl 45s we pressed of the song

Still from “Jack Park Canny Dope Man” video

I know you talking trippy on my plane

I just want your first lane

I’m back to the shit it feel my pain

She got a crew on top of my chain

Wasted in the street like a pain

-TravBot (nickname we gave our AI model)

The song title and lyrics were pulled from a 10,000-line lyric sheet of unintelligible and hilarious rhymes our little bot had generated. We then used a similar method to generate melodies, chords, bass lines and beats resembling Scott’s productions, feeding the notes to our GAN in the form of MIDI files. We locked in a BPM arbitrarily, so that the music wasn’t the kind of chaos you hear in a lot of AI music today.

What most people don’t know, and what I think was often assumed otherwise, is that AI doesn’t do anything on its own. We don’t have what they call “Artificial General Intelligence,” at least not yet. AI was, and still is, sort of a glorified copy machine: it only puts out rearranged versions of what you put in. To that end, we knew we had to make a song. If the AI was left to its own devices, it would just keep generating an endless song with endless lyrics. There had to be parameters. What I’m saying is, YES, there was human involvement. We art directed the AI. For some people, this was somehow a deal-breaker when it came to us claiming the song was “made by AI.” That was another interesting turn in this process that I didn’t expect (more about the human need for authenticity later).

I shuffled through about 300 AI-generated MIDI melodies in minor keys until I hit on some that resembled Travis Scott’s music. It was exciting to hear these notes, which were truly “played” by AI. Only about 25% of them were any good. But of those, it did feel like some kind of magic when the chords and melodies hit my ears.

A screenshot of the AI-generated MIDI in Ableton Live

Article from The Fader after the release of our TravisBott song, in which they asked for Holly Herndon’s reaction

The release of the song and video, and the buzz generated by PR, caused more of a ruckus than I expected, but it was a sign of things to come. The agency I was working for at the time, space150, had me work on this in a small team with just one designer and one dev. It was a passion project and our time wasn’t being paid for. That added to the pressure to make not only something good with AI, but to make it within a pretty short timeline. We wanted it to be 100% AI, including an AI-generated voice that could mimic Scott’s own. But in 2019 the only place doing anything like that was a startup called Lyrebird. It was soon acquired by Descript, an app for podcasters and editors that uses its AI voice cloning to fill in gaps in an edit. I hit up Lyrebird with the details of the project and they didn’t reply.

So we fudged some stuff. The voice, for instance, wasn’t AI-generated at all. We hired a rapper named So-So Topic, and I sat with him for a two-hour session at a friend’s studio. He smoked a bunch of weed and spent an hour laughing at the nonsense lyrics and trying to make sense of them. Then he belted the vocals out in, like, two takes. “It’s still the weirdest thing I’ve ever been a part of,” he said.

Rapper (and voice of TravisBott) So-So Topic, now known as Tommy Raps

We wanted to use AI as a creative tool, though people perhaps pictured us telling a computer, “Hey AI, go make a rap song!” That, of course, wouldn’t work. Luckily, it is still sci-fi to think you can order an AI to create something from nothing. AI is more a glorified copy machine than a sentient creative being. In the end, everything comes from something. Even if you aren’t copying another person’s art directly, any creative professional who has made anything and put it out into the world understands this. We are mostly the sum of our likes, our preferences and our experiences of all the things we’ve ever enjoyed. We spend much of our early lives absorbing music and knowledge. In the same way, an AI needs a shitload of data to output anything of creative value.

In the beginning, we needed to train it on something, so Travis Scott seemed like the perfect cultural icon to pick from. What we had were pieces of AI-generated creative, and we art directed those. In the end, if we were going to follow through with it, it had to be interesting, and at least sort of good, even if the lyrics were nonsense.

Headline from Billboard.com article on deepfake vocals in music

We received reviews from Vice, i-D, Complex, Hypebeast, Dazed and The Fader, among others. Every article, to varying degrees, was jock-riding the doom-hype. They fixated on what an AI-generated artist/impersonator/digital imposter meant for the music world and for the future of musicians and recording artists. Had these guys never heard of “Weird Al” Yankovic? Couldn’t this AI music just live as a piece of conceptual art, or a playful parody, rather than a cryptic message about music’s potentially doomed future?

This little AI music project seemed to stir up ire among both fans (see Anthony Fantano’s reaction below) and artists (see Holly Herndon’s reaction). It came about right as people were starting to resent digital Goliaths like Spotify. People had been made aware of how woefully little is paid to the artists who make the content these platforms get paid to distribute. By late 2019, Web 2.0 had reared its ugly head as a fully capitalist, profit-driven machine. It was exploitative, and as unfeeling and insincere as Mark Zuckerberg smoking meats live on Facebook. We had learned that Facebook was selling our data, that we were the product. They’d sold us out, and now AI was coming to take our livelihood and humanity. Or at least, that seemed to be the sentiment.

The only critic who actually spoke to the musical aspects of the project was Anthony Fantano, whose review was the most honest and accurate. It was a badge of honor to see this AI project reviewed by “the internet’s busiest music vlogger.” It felt almost like validation that the song had achieved some level of legitimacy. That held even as he deftly unraveled its claims to authenticity. He questioned the balance of human vs. AI involvement in the song’s creation, and what it means for a creative work to be AI-generated at all. Keep in mind this was all before Midjourney and DALL·E came into existence.

Still from Anthony Fantano’s review of the TravisBott song on his YouTube channel

Fantano does us a favor by at least getting into the sonic details of the song. He ultimately said it sounded like a “cheap knockoff of Travis Scott,” and I can’t argue with him. Would meaningful, cogent lyrics that tell a story have helped the song avoid feeling like a knockoff? Perhaps. Unfortunately for the project, when a song is presented as “AI made this,” it seems to close a door in people’s minds that prevents them from just vibing with it. If you are aware that AI had a hand in it, it becomes an intellectual thing. It breaks the fourth wall a bit and makes the song too cerebral for a genre that is meant to be visceral, with lyrics that champion bravado bordering on fantasy. Rap songs demand catharsis. It’s music of the amygdala: 80% visceral. The remaining 20% is split among lyrics that paint in broad strokes and imply a kind of sexualized, violent mythology. These are wrapped in satisfying Mad Libs that kick your dopamine receptors when you put the meaning together a moment after solving the lyrical puzzle. Even writing about rap this way feels odd, like how an AI might describe the music to another AI.

Fantano’s reaction mirrors that of most people who encounter a work after being told up front, “by the way, an AI made this.” We are still deep in the uncanny valley when we encounter anything made by AI. It is a novelty at best, and far from being taken seriously. Music is one art form that every human has an opinion on. Many consider themselves experts, at least when it comes to their own taste and what a song in a certain genre calls for. With AI-generated music, our defenses go up immediately if we know that is what we are hearing, because we don’t want to be tricked by something not of flesh and blood. Music is sort of sacred in that way. It speaks to our lived experience and codifies our memories. It makes moments more meaningful. So how could AI even attempt to touch that essence of what is most human? I’m rambling about this because I think it is the crux of what we don’t like about AI music. I happen to agree with the sentiment. While working on this project, I came to realize that, at least creatively, AI will never eclipse us. Our critical eye focuses laser-sharp on whatever might be lacking, because deep down we know that being human is sacred too.

AI will never do what we humans do best, which is to judge something on its intellectual, emotional and aesthetic qualities. In other words, to decide what is good.

Billboard UK wanted to do an interview. Over a Zoom call, I explained the process and the thoughts I’d had while working on the project. They quoted me as saying:

We wanted to use AI and deepfake audio in a truly creative way, and not in that doomsday machine-kind of way that everyone was predicting. We wanted to show its potential [as a branding tool].

That was the first and only time I’ve ever been quoted by a magazine. In the end, I think AI is going to amount to a helpful creative tool. If people use it to copy the likeness of other things, then it really is nothing more than elevated fan fiction.

Written by Josh Lundquist, 2023